This is a panel discussion on artificial general intelligence (AGI) featuring several prominent thinkers: Elon Musk, Stuart Russell, Ray Kurzweil, Demis Hassabis, Sam Harris, Nick Bostrom, David Chalmers, Bart Selman, and Jaan Tallinn, with Max Tegmark as moderator. They discuss the likely outcome of achieving human-level AGI.

It was organized by the Future of Life Institute, a volunteer-run research and outreach organization in the Boston area that works to mitigate existential risks facing humanity, particularly those posed by artificial intelligence.

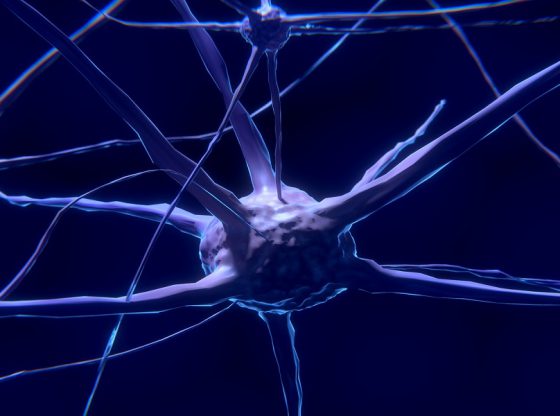

The “general” in artificial general intelligence (also known as “strong AI”) refers to breadth of application comparable to that of the human intellect: such a system could successfully perform any intellectual task a human can put their mind to.

The point at which an AGI becomes able to reprogram and improve itself is called the technological singularity. It is hypothesized that this capacity for “recursive self-improvement” could set off a runaway reaction of self-improvement cycles, leading to an intelligence explosion and the emergence of a superintelligence free of the limitations of the human intellect.

The mathematician John von Neumann is credited with first using the term “singularity” in the context of technological progress causing accelerating change: “The accelerating progress of technology and changes in the mode of human life, give the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, can not continue”. The idea has since been echoed by many others, who emphasize the “fog of war” problem: the difficulty of forecasting what would follow such a singularity.